We live in an environment that is drenched in misinformation, “fake news”, and propaganda, not because of an unavoidable accident but because it has been created by people in pursuit of political and economic objectives.

This is not a natural disaster; it’s human-made. On the positive side, this implies that, unlike with earthquakes or tsunamis, a solution is likely to exist and ought to be achievable. On the negative side, it means that the solution is unlikely to involve more communication alone.

Misinformation does not just misinform; it also undermines democracy by calling into question the knowability of information altogether. And without knowable information, deliberative democratic discourse becomes impossible.

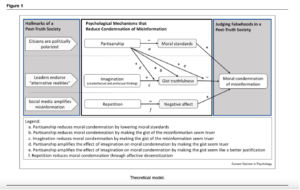

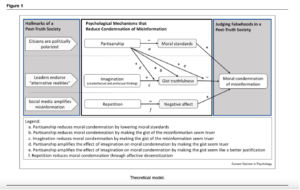

In a post-truth world, purveyors of misinformation need not convince the public that their lies are true. Instead, they can reduce the moral condemnation they receive by appealing to our need to belong, our tribalism, our partisanship. This is done by convincing us a falsehood could have been true or might become true in the future (imagination), or simply exposing us to the same misinformation multiple times (repetition).

Because of this, misinformation sticks. Erasing “fake news” from one’s memory is a challenging task, even under the best of circumstances. Researchers Walter & Murphy found that correcting real-world misinformation is more challenging than correcting constructed misinformation.

It takes little imagination to realise that misinformed individuals are unlikely to make optimal decisions, and that even putting aside one’s political preferences, this can have adverse consequences for society as a whole.

Corrections therefore inevitably play catch-up with misinformation, and may be outpaced by falsehoods.

Let’s dig into this.

As lies and “fake news” flourish, citizens appear not only to believe misinformation, but also to condone misinformation they do not believe by giving it a moral pass. When people condone misinformation, leaders can tell blatant lies without damaging their public image, and people may have little compunction about spreading misinformation themselves. To understand our “post-truth” era, we therefore need to understand not only the psychology of belief, but also the moral psychology of misinformation.

The three hallmarks of a post-truth society are that

a) citizens are politically polarised,

b) leaders endorse “alternative realities,” and

c) technology amplifies misinformation.

Each hallmark at the societal level is associated with a psychological factor at the individual level. This is hopeful as we can build intention around noticing and interrupting these patterns.

The three psychological factors that encourage people to condone misinformation are partisanship, imagination, and repetition. These influences reduce moral condemnation of misinformation by lowering moral standards, convincing people that a lie’s “gist” is true, and dulling affective reactions. They can also amplify partisan disagreement and polarisation.

Motivated and cognitive processes both offer plausible accounts for how we become manipulated through dishonesty and deception (see the theoretical model image). Perhaps because supporters and followers want to excuse misinformation that fits with their beliefs, they set lower moral standards for behaviour that serves their own side’s interests.

Follow the legend in the image above to explore:

a) Partisanship: followers and supporters may judge the gist, or general idea, of a falsehood as truer when it fits with their prior knowledge, and

b) the truer a falsehood’s gist, the less unethical the falsehood may seem.

c) Imagination: another hallmark of post-truth society is that many citizens eschew facts and evidence to inhabit “alternative realities” endorsed by leaders and other influencers. However, to increase people’s inclination to condone a falsehood, it may not be necessary to make them believe in alternatives to reality; it may be sufficient to get them to imagine such alternatives.

For instance, after lying about the size of the crowd at Trump’s inauguration, his administration suggested that attendance might have been higher if the weather had been nicer.

Imagining how a falsehood could have been true or might become true makes the falsehood seem less unethical to spread, which in turn results in weaker intentions to punish the speaker, and stronger intentions to like or share the falsehood on social media.

Simply imagining, without believing, alternatives to reality can soften moral judgments of misinformation. Misinformation such as this invites us to imagine two different types of alternative to reality: a counterfactual world in which the falsehood could have been true, and a prefactual world in which it might become true.

Research suggests that imagining either alternative to reality can reduce how much people condemn misinformation, even when they recognise the misinformation as false.

d) Imagination can also increase the partisanship of people’s reactions to dishonesty. Imagining how a falsehood could have been true or could become true reduces followers’ moral condemnation of falsehoods to a greater extent when those falsehoods fit, versus conflict, with their beliefs.

e) Even when partisans agree that the gist of the falsehood is true, they may disagree about how justified it is to tell a falsehood with a truthful gist.

f) Repetition: Repeated exposure to misinformation reduces moral condemnation.

A third hallmark of a post-truth society is the existence of technologies, such as social media platforms, that amplify misinformation. Such technologies allow fake news (“articles that are intentionally and verifiably false and that could mislead readers”) to spread fast and far, sometimes in multiple periods of intense “contagion” across time.

When fake news does “go viral,” the same person is likely to encounter the same piece of misinformation multiple times. Research suggests that these multiple encounters may make the misinformation seem less unethical to spread.

This phenomenon seems to occur because repetition reduces the negative affective reaction people experience in response to fake news. When people first encounter a specific fake-news headline (and recognise it as fake), they may experience a “flash of negative affect” that informs their moral judgments. For example, anger that someone would spread that piece of misinformation may lead people to judge it as particularly unethical. But repeatedly encountering the same headline dulls this anger. Because affect informs moral judgments, reduced anger means less severe moral judgments.

This mechanism predicts, and experiments confirm, that wrongdoings across the moral domain (and not just fake-news sharing) seem less unethical when repeatedly encountered.

Look out for negatively charged messages, incoherence, inconsistency and paltering.

People are generally more likely to share messages featuring moral–emotional language (Brady et al., 2017), and this tendency may be amplified by people’s negativity bias. This is the human proclivity to attend more to negative than to positive things (Soroka et al., 2019).

We tend to seek out information that will confirm our pre-existing view of the world and avoid information that conflicts with what we already believe.

As Brulle et al. (2012) noted specifically in the context of climate change (and I would add whistleblowing), “introducing new messages or information into an otherwise unchanged socio-economic system will accomplish little”. Instead, we need to pursue multiple avenues to contain misinformation and redesign the information architecture that facilitates its dissemination.

Incoherence is a frequent attribute of conspiracy theories as well as denial. Incoherent arguments are, by definition, suspect and should be dismissed. Other techniques involve false dichotomies (“Either you are with us, or you are with the terrorists”; George W. Bush, 21 September 2001), scapegoating, ad hominem argumentation, and emotional manipulation (e.g., fear-mongering). (See my previous blog on Paltering)

What can we do about these human vulnerabilities to misinformation? How can we stem the spread of misinformation in this post-truth world?

Anti-misinformation efforts commonly aim to improve discernment between fact and fiction, or to make the inaccuracy of fake news more salient. However, such interventions will be insufficient if people intentionally spread misinformation they do not believe.

Rather than (only) trying to make people less credulous, future interventions should consider nudging them to be less morally tolerant of dishonesty and to become lovers of the truth.

Human beings are cognitive truth detectors, and it follows that if people can be trained to detect flawed argumentation, those skills might inoculate them against being misinformed. Research in this field suggests a psychological inoculation process consisting of two core elements:

1) a warning to help activate threat in message recipients (to motivate resistance), and

2) refutational pre-emption (or pre-bunking).

The two components above are assumed to work together in the following fashion: forewarning people that they are about to be exposed to challenging content is thought to elicit threat to motivate the protection of existing beliefs. In turn, two-sided refutational messages, which involve the threatening information, serve to both teach and inform people as they model the counter-arguing process and provide specific content that can be used to resist persuasive attacks (Compton, 2013; McGuire, 1970).

Let’s return to our ‘Theoretical Model’ image.

The three psychological factors reviewed earlier (partisanship, imagination, and repetition) affect moral judgments through different psychological pathways and would therefore require different interventions to address.

For example, repetition makes misinformation seem less unethical by dulling people’s affective responses, because affect usually influences moral judgment. It makes sense therefore to encourage people to base their moral judgments on reason rather than affect in order to reduce the effect of repetition on moral judgments.

Nudging people to reason about morality may not prevent repetition from dulling affective responses, but it should decouple affective responses from moral judgments.

Encouraging people to focus on the precise truth of information they encounter, and not just its gist, should reduce their disposition to condone lies that are easy to imagine.

Satirising attempts to persuade through imagination might inoculate people against their effects. For example, media outlets that want to hold leaders accountable for lying might present implausible prefactuals to communicate that one could imagine scenarios in which anything might become true (e.g., if a reincarnated Steve Jobs took control of Theranos, then Theranos technology would revolutionise healthcare). Private Eye magazine is a great example of this.

The effect of partisanship on moral judgments may be the most difficult to address because this effect is likely to have multiple causes. It might help to show people that they use lower moral standards for falsehoods that are aligned versus misaligned with their beliefs (Figure 1, path a), or again, to shift focus away from the falsehood’s gist (Figure 1, path b), but ultimately, reducing partisans’ willingness to condone misinformation may require easing partisan animosity.

Simple measures such as infographics and pausing for a few seconds to consider the accuracy of a particular news item have also shown promise.

Pre-emptively exposing individuals to a weakened form of a misleading argument, as well as teaching them how to refute those arguments, triggers the production of ‘mental antibodies’. See coaching and training for Courageous Conversations here.

Active inoculation may confer a comparatively stronger inoculation effect because people are encouraged to generate their own antibodies and counterarguments. As participants take an active role in the inoculation process, this allows them to generate internal refutations themselves, which may lead to longer lasting effects owing to increased cognitive involvement.

The neuroscience on this shows that active inoculation is relevant because inoculation messages are known to change the structure of associative memory networks, boosting nodes (e.g., counter-arguments) as well as the number of linkages between nodes, which helps strengthen people’s ability to resist persuasion (Pfau et al., 2005).

One example of an active, technique-based inoculation intervention is Bad News, a free online browser game in which players learn about six common misinformation techniques known as DEPICT:

Discrediting opponents,

Emotional language use,

increasing intergroup Polarisation,

Impersonating people through fake accounts,

spreading Conspiracy theories, and

evoking outrage through Trolling.

Research on the Bad News game found that the effect of inoculation was most pronounced for those who were most susceptible to misinformation prior to gameplay. Additionally, researchers found that participants rated ‘real’ news as significantly less reliable after playing.

Overall, they found that playing Bad News significantly decreased the perceived reliability of real-world misinformation that made use of one of the DEPICT misinformation techniques.

This is because the Bad News game confers psychological resistance against misinformation techniques used in real-life examples of online misinformation. In line with previous research, it was found that players’ truth discernment, or their ability to distinguish manipulative from non-manipulative information, significantly improved after gameplay.

My final point is a question: once you’ve built up the antibodies to psychologically combat misinformation, are you sufficiently skilled to communicate via the Courageous Conversation framework in order to defend the truth?

Find out how here: https://speakout-speakup.org/one-to-one-coaching/
